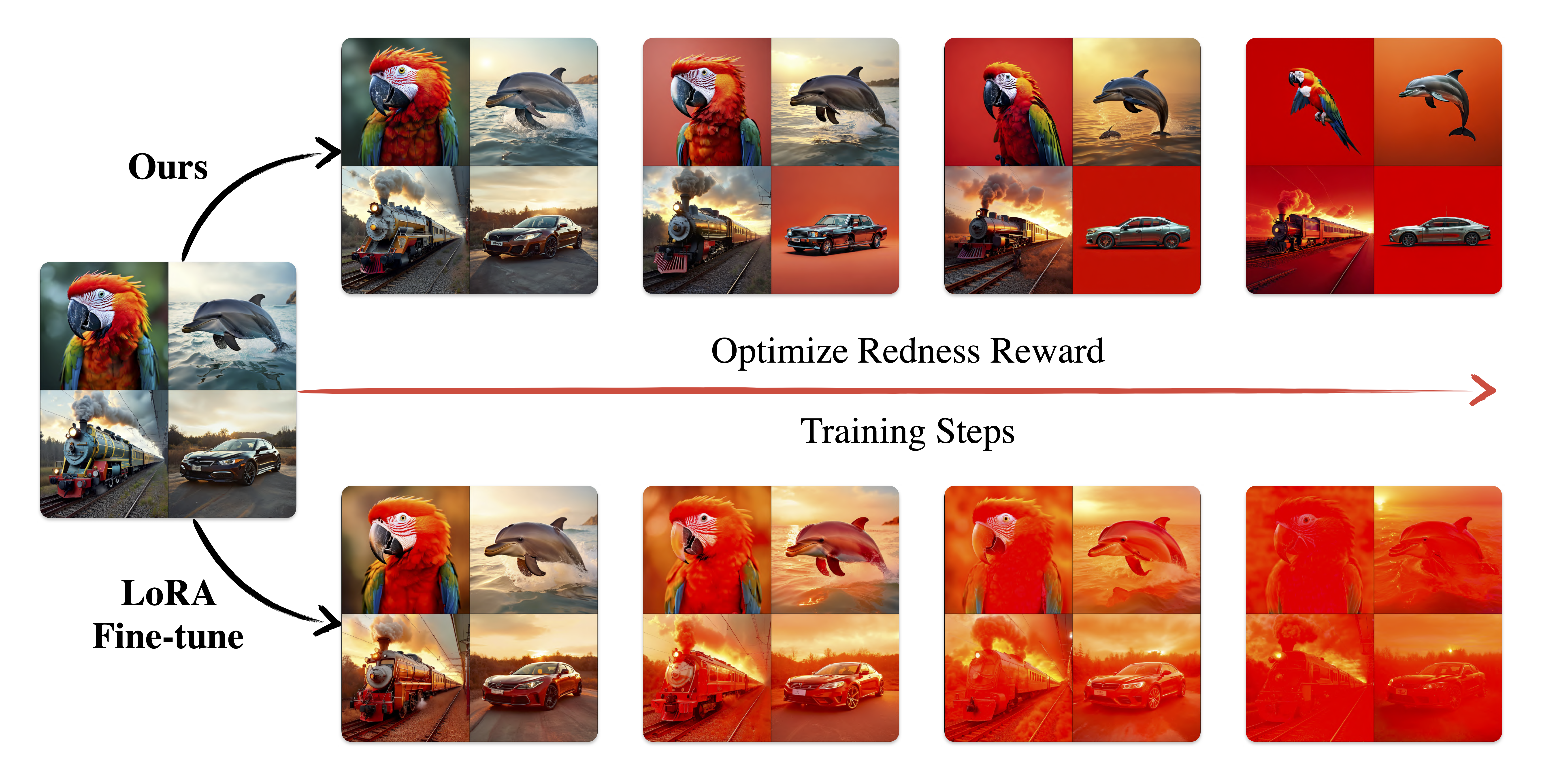

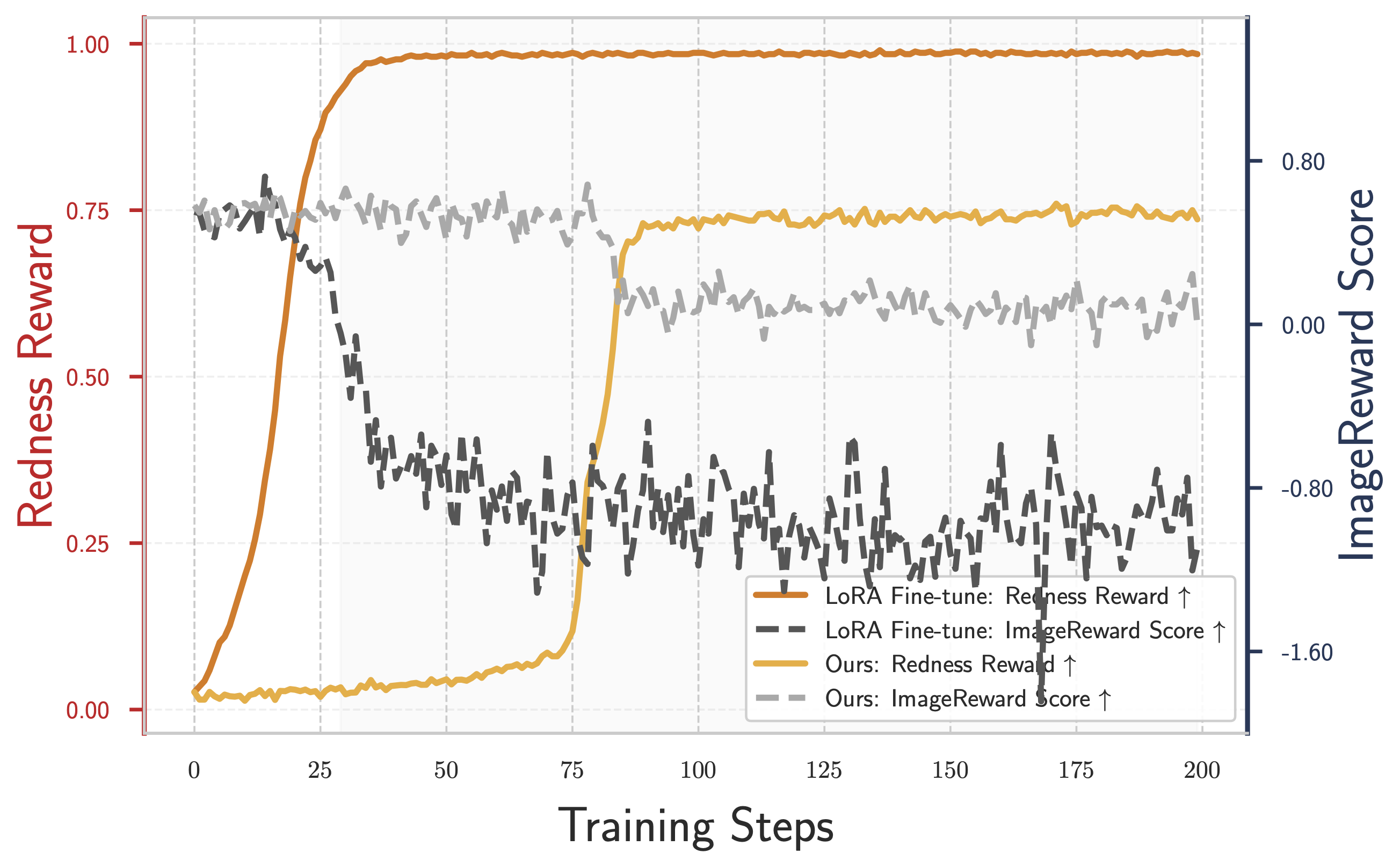

HyperNoise learns the reward-tilted distribution: generated images become redder while remaining within the distribution of natural images. In contrast, direct reward fine-tuning trivially overfits to the reward and degrades the quality of the generated images.
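The caption contrasts sampling from a reward-tilted distribution with directly maximizing the reward. One common way to formalize the tilted target is the KL-regularized form p\*(x) ∝ p_base(x) · exp(r(x) / β). That form, and the temperature `β`, are assumptions here and are not taken from this page. The toy sketch below uses four hypothetical "images" with a made-up redness reward (`reward_tilt`, `base`, and `redness` are all illustrative names). It shows only the target distribution, not how HyperNoise trains its hypernetwork.

```python
import numpy as np

def reward_tilt(base_probs, rewards, beta):
    """Return the distribution proportional to base_probs * exp(rewards / beta).

    Assumed KL-regularized reward tilt; beta is a hypothetical temperature.
    """
    base_probs = np.asarray(base_probs, dtype=float)
    rewards = np.asarray(rewards, dtype=float)
    with np.errstate(divide="ignore"):
        # log(0) = -inf, so items the base model never produces keep zero mass
        logits = np.log(base_probs) + rewards / beta
    logits -= logits.max()  # numerical stability before exponentiating
    weights = np.exp(logits)
    return weights / weights.sum()

# Hypothetical toy "images": three natural ones and one unnatural all-red image
names = ["grey street", "sunset", "red car", "pure red noise"]
base = np.array([0.5, 0.3, 0.2, 0.0])     # base model never produces the noise image
redness = np.array([0.1, 0.6, 0.8, 1.0])  # toy reward: how red each image is

tilted = reward_tilt(base, redness, beta=0.2)
direct = names[int(np.argmax(redness))]  # pure reward maximization ignores the base model

print("base E[r]   =", float(base @ redness))
print("tilted E[r] =", float(tilted @ redness))
print("tilted probs:", [round(float(p), 3) for p in tilted])
print("reward argmax:", direct)
```

The tilt multiplies the base probability, so anything the base model assigns zero mass keeps zero mass. The redder distribution therefore stays inside the natural-image support. Maximizing the reward alone lands on the unnatural all-red image, which mirrors the overfitting the caption describes.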

```bibtex
@inproceedings{eyring2025noise,
  author    = {Eyring, Luca and Karthik, Shyamgopal and Dosovitskiy, Alexey and Ruiz, Nataniel and Akata, Zeynep},
  title     = {Noise Hypernetworks: Amortizing Test-Time Compute in Diffusion Models},
  booktitle = {Advances in Neural Information Processing Systems (NeurIPS)},
  year      = {2025},
}
```